Some tools can be more productive than others. In our experience of building a machine learning pipeline for production, we have come to appreciate the raw strength of combining SAP HANA with SAP Data Services. Reworking the approach to use this combination saves a significant amount of time compared with a vanilla approach that relies on Python for data wrangling, cleaning, discovery, and normalization, all of which are significant parts of machine learning pipeline development.

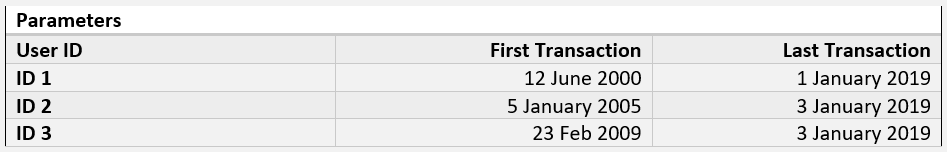

Consequently, we learned to split tasks between the tools according to the requirements, so that each tool is used to its full potential and the time required to run the pipeline is minimized. One of the strengths of SAP Data Services is its ability to run Python scripts, which is extremely powerful because it lets us integrate our full pipeline, combining modifications run on SAP HANA with Python. In the next part, we compare the time required to perform the same task in SAP HANA and in Python. This covers one stage only; the task has the following parameters:

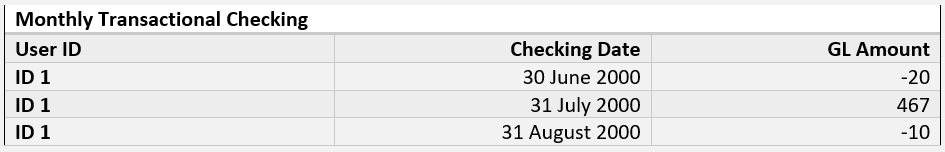

The script should take the parameters shown in the table and generate a monthly aggregation of each user's behavior, converting them into a table like the one shown below:

With this method, we can check the end of each month to determine whether the user is in debt. Since we have data for approximately 180,000 accounts, these operations take time, as each user may have made numerous transactions over a period of up to nine years.

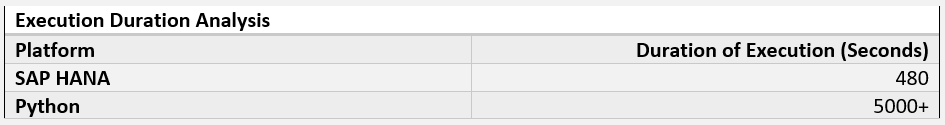
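As a rough illustration of the monthly aggregation described above, here is a minimal pandas sketch. The column names (`account_id`, `txn_date`, `amount`) and the rule "in debt means a negative running balance at month end" are assumptions made for this example; the actual table layout and debt definition used in our pipeline are not reproduced here.

```python
import pandas as pd


def monthly_debt_status(transactions: pd.DataFrame) -> pd.DataFrame:
    """Aggregate transactions per account and month, then flag month-end debt.

    Assumed (hypothetical) input columns: account_id, txn_date, amount.
    """
    df = transactions.copy()
    # Bucket every transaction into its calendar month.
    df["month"] = pd.to_datetime(df["txn_date"]).dt.to_period("M")

    # Net movement per account and month (groupby sorts by the keys).
    monthly = (
        df.groupby(["account_id", "month"], as_index=False)["amount"]
        .sum()
        .rename(columns={"amount": "net_amount"})
        .sort_values(["account_id", "month"])
        .reset_index(drop=True)
    )

    # Running balance per account, evaluated at the end of each month.
    # Months without any transaction produce no row in this sketch.
    monthly["month_end_balance"] = monthly.groupby("account_id")["net_amount"].cumsum()
    monthly["in_debt"] = monthly["month_end_balance"] < 0
    return monthly


example = pd.DataFrame(
    {
        "account_id": [1, 1, 1, 2],
        "txn_date": ["2020-01-05", "2020-01-20", "2020-02-03", "2020-01-10"],
        "amount": [100.0, -150.0, 80.0, 40.0],
    }
)
result = monthly_debt_status(example)
```

In this toy input, account 1 ends January with a negative balance and recovers in February, so only its January row is flagged.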

The table below compares the time required to execute this task in Python and in SAP HANA. Please note that Python offers multiple approaches to complete this task. The first method appends to the SAP HANA table directly using the hdbcli library. However, given the amount of data being processed, this approach would be rather time-consuming.

Consequently, we will use the second method: appending to a comma-separated values (CSV) file using the pandas DataFrame.to_csv() function. This method is faster, though it should be mentioned that we are not including the time needed to load the CSV into the table. We are only accounting for the time required for the script to output the results.
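A minimal sketch of the CSV-append approach might look like the following. The helper name `append_to_csv` and the output path are hypothetical; the only library call relied on is `DataFrame.to_csv` with `mode="a"`.

```python
import os
import tempfile

import pandas as pd


def append_to_csv(chunk: pd.DataFrame, path: str) -> None:
    """Append one chunk of results to a CSV file, writing the header only once."""
    # Write the header only when the file does not exist yet or is empty.
    write_header = not os.path.exists(path) or os.path.getsize(path) == 0
    chunk.to_csv(path, mode="a", header=write_header, index=False)


# Usage: two result chunks appended to the same (temporary) file.
out_path = os.path.join(tempfile.mkdtemp(), "monthly_results.csv")
append_to_csv(pd.DataFrame({"account_id": [1, 2], "in_debt": [True, False]}), out_path)
append_to_csv(pd.DataFrame({"account_id": [3], "in_debt": [False]}), out_path)
combined = pd.read_csv(out_path)
```

Loading the finished file into the database table is a separate step and, as noted above, is not part of the timing.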

As shown above, Python required 5000 seconds for this single process, whereas SAP HANA took a mere 480 seconds, roughly a tenfold difference! The Python run time was unacceptable, and it stems from several issues that emerge when dealing with large volumes of data in Python. Some of these are the following:

The volume of extracted data is too large to hold in memory, so it has to be processed in batches. However, doing so adds time overhead to the run because of the SELECT statements issued over the connection. This makes it a double-edged sword.

If a multi-processing approach is employed, we run multiple large SELECT statements against SAP HANA at once, which takes more time to execute. Multi-processing also rules out loading data directly into SAP HANA using INSERT statements with hdbcli. Therefore, we would need to output the data into separate files and merge them afterwards, as two processes cannot write into the same file concurrently. Moreover, we hit the same memory bottleneck: the processes have to be programmed with memory limits in mind to keep the script from crashing. This adds yet another layer of complexity.

Although we apply data type optimization in pandas to reduce execution time, the run remains sluggish because of the merge between transactional data and dimensional/feature data.

Most important are the management and maintenance problems that come with large Python scripts such as the one above, which involve numerous data manipulations. For example, an error may occur in an iteration at row 3,000,000. Errors like these are very difficult to trace and handle.
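The batching and data type points above can be sketched as follows. This is an illustration under stated assumptions, not our production script: `sqlite3` stands in for the database connection so the example runs anywhere, on the assumption that the hdbcli connection exposes the same DB-API 2.0 cursor methods (`execute`, `fetchmany`); the table and column names are made up, and the helper names `fetch_in_batches` and `shrink_dtypes` are hypothetical.

```python
import sqlite3  # stand-in for a DB-API 2.0 connection such as hdbcli's
from typing import Iterator, Sequence

import pandas as pd


def fetch_in_batches(
    conn, query: str, columns: Sequence[str], batch_size: int = 50_000
) -> Iterator[pd.DataFrame]:
    """Yield the query result as DataFrames of at most batch_size rows."""
    cursor = conn.cursor()
    try:
        cursor.execute(query)
        while True:
            rows = cursor.fetchmany(batch_size)  # each call costs a round trip
            if not rows:
                break
            yield pd.DataFrame(rows, columns=list(columns))
    finally:
        cursor.close()


def shrink_dtypes(df: pd.DataFrame) -> pd.DataFrame:
    """Downcast numeric columns and turn repetitive text columns into categories."""
    out = df.copy()
    for col in out.columns:
        if pd.api.types.is_integer_dtype(out[col]):
            out[col] = pd.to_numeric(out[col], downcast="integer")
        elif pd.api.types.is_float_dtype(out[col]):
            # float32 halves memory but loses precision; check before using on money.
            out[col] = pd.to_numeric(out[col], downcast="float")
        elif pd.api.types.is_string_dtype(out[col]) and len(out) > 0:
            if out[col].nunique() / len(out) <= 0.5:
                out[col] = out[col].astype("category")
    return out


# Usage with an in-memory table of ten made-up transactions.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE tx (account_id INTEGER, segment TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO tx VALUES (?, ?, ?)",
    [(i % 3, "retail" if i % 2 else "corp", i * 0.5) for i in range(10)],
)
batches = [
    shrink_dtypes(batch)
    for batch in fetch_in_batches(
        conn,
        "SELECT account_id, segment, amount FROM tx ORDER BY rowid",
        ["account_id", "segment", "amount"],
        batch_size=4,
    )
]
```

Smaller batches keep memory flat but multiply the round trips, which is the trade-off described above. Note also that categorical columns built per batch can have different category sets, so concatenating batches may fall back to a plain text column.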

These issues called for a solution superior to the vanilla Python approach. We needed to shift our implementation methodology to make more use of SAP HANA and SAP Data Services. We were surprised by how easy this was and how strong these tools are in combination, to the point that our pipeline is now almost fully implemented on them. The pipeline is currently about 90% complete; it uses the three platforms together efficiently and has saved us a significant amount of time that would otherwise have been wasted on managing scripts.

Moreover, the workflow is organized into clear stages, so if we run into an error or problem in one stage, we can locate it immediately and fix it without affecting the others.

That said, Python remains very powerful in specific cases, including:

Performing difficult or custom logic modifications on small parts of the data, such as the redistribution technique we introduced in the previous article.

Deploying and managing the machine learning model.

Gathering data from the web, a topic we will discuss in depth in another article.

The following diagram shows the implementation of the pipeline (“In Progress”) on SAP Data Services:

You can see immediately that this looks significantly better than the script-management approach. We can see every individual step and what it does. You can also drill down to see the fixed inputs and outputs of each stage. That way, if a stage requires modification, the change can be made independently within that stage alone. As long as the output is unaffected, the next stage will normally not need any changes. In terms of management, this is one of the most effective ways to implement data manipulation for machine learning purposes.

In summary, the change in our approach brought us numerous benefits, including:

Saving a great deal of development and maintenance time by greatly reducing the run time of the workflow.

Simplifying the look and feel of the pipeline by using SAP Data Services.

Retaining the same strengths afforded to us by Python, as all the coding performed on SAP HANA is done using Structured Query Language (SQL).

Does SAP HANA deserve the crown as the king of data science implementations? Tell us what you think in the comments. We will not discuss the details of each stage of the implementation, as that goes beyond the scope of a single article. We plan to release an extensive series detailing each stage of our approach, so stay tuned!